AUX_OBS Ground Observatory Data#

Abstract: We demonstrate access to geomagnetic ground observatory data through VirES. This is the AUX_OBS product, distributed by BGS to support the Swarm mission, which contains data from INTERMAGNET and the World Data Centre (WDC) for Geomagnetism. The data are available as three collections: 1-second and 1-minute cadences (INTERMAGNET definitive and quasi-definitive data), and specially derived hourly means spanning the past century (WDC).


About the data#

The data are also available from the BGS FTP server (ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/). If that is more useful to you, refer to the older notebook that demonstrates access to the FTP server.

Please note that the data are subject to different usage terms from the Swarm data:

If you use the 1-second or 1-minute data, please refer to the INTERMAGNET data conditions

If you use the 1-hour data, please also refer to the WDC usage rules and cite the article about the preparation of these data:

Macmillan, S., Olsen, N. Observatory data and the Swarm mission. Earth Planet Sp 65, 15 (2013). https://doi.org/10.5047/eps.2013.07.011

Caution: The magnetic vector components have been rotated into the geocentric (NEC) frame rather than the geodetic frame, so that they are consistent with the Swarm data. This differs from the data provided directly by the observatories, which are given in the geodetic frame.
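The rotation mentioned in the caution depends only on the angle δ between the geodetic and geocentric vertical at the observatory: with geodetic components X (north), Y (east), Z (down), N = X cos δ − Z sin δ, E = Y, C = X sin δ + Z cos δ, where δ is geodetic latitude minus geocentric latitude. Below is a minimal sketch of that standard transformation, assuming the WGS84 ellipsoid and a known observatory height; it illustrates the idea and is not necessarily the exact procedure BGS uses to prepare AUX_OBS. The function names are hypothetical.

```python
import math

# WGS84 ellipsoid (assumption: the ellipsoid used for AUX_OBS preparation may differ)
WGS84_A = 6378137.0  # semi-major axis [m]
WGS84_F = 1 / 298.257223563  # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_geocentric(lat_gd_deg, height_m):
    """Return (geocentric latitude [deg], geocentric radius [m])."""
    lat = math.radians(lat_gd_deg)
    # Prime-vertical radius of curvature
    n_curv = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)
    s = (n_curv + height_m) * math.cos(lat)  # distance from the rotation axis
    z = (n_curv * (1 - WGS84_E2) + height_m) * math.sin(lat)  # distance from the equatorial plane
    return math.degrees(math.atan2(z, s)), math.hypot(s, z)


def rotate_xyz_to_nec(x, y, z, lat_gd_deg, height_m=0.0):
    """Rotate geodetic X (north), Y (east), Z (down) into geocentric N, E, C."""
    lat_gc_deg, _ = geodetic_to_geocentric(lat_gd_deg, height_m)
    # Angle between the geodetic and geocentric verticals (positive in the northern hemisphere)
    delta = math.radians(lat_gd_deg - lat_gc_deg)
    n = x * math.cos(delta) - z * math.sin(delta)
    c = x * math.sin(delta) + z * math.cos(delta)
    return n, y, c  # the east component is unchanged by this rotation
```

Going from NEC back to geodetic XYZ uses the inverse rotation (δ replaced by −δ). The rotation preserves the field magnitude, so F is the same in both frames.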

# Display important package versions used

%load_ext watermark

%watermark -i -v -p viresclient,pandas,xarray,matplotlib

Python implementation: CPython

Python version : 3.11.6

IPython version : 8.18.0

viresclient: 0.15.0

pandas : 2.1.3

xarray : 2023.12.0

matplotlib : 3.8.2

from viresclient import SwarmRequest

import matplotlib.pyplot as plt

Organisation of data in VirES#

AUX_OBS collection types#

Data are organised into AUX_OBSH (hour), AUX_OBSM (minute) and AUX_OBSS (second) types. For example, to access the hourly data, use the collection name SW_OPER_AUX_OBSH2_.
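The collection names follow a simple pattern, which can be wrapped in a small helper. `aux_obs_collection` and `CADENCE_TO_TYPE` are hypothetical names, not part of viresclient; the sketch only assembles the strings used in this notebook, including the optional `:<IAGA_code>` observatory suffix covered later on.

```python
# Hypothetical helper (not part of viresclient): build AUX_OBS collection names
CADENCE_TO_TYPE = {"hour": "AUX_OBSH", "minute": "AUX_OBSM", "second": "AUX_OBSS"}


def aux_obs_collection(cadence, iaga_code=None):
    """Return e.g. 'SW_OPER_AUX_OBSM2_', or 'SW_OPER_AUX_OBSM2_:ABK' for one observatory."""
    name = f"SW_OPER_{CADENCE_TO_TYPE[cadence]}2_"
    if iaga_code is not None:
        # IAGA codes are three-letter observatory identifiers, e.g. ABK or LER
        if len(iaga_code) != 3:
            raise ValueError(f"expected a three-letter IAGA code, got {iaga_code!r}")
        name += f":{iaga_code.upper()}"
    return name
```

For example, `request.set_collection(aux_obs_collection("minute", "ABK"))` passes the same string as `request.set_collection("SW_OPER_AUX_OBSM2_:ABK")`.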

request = SwarmRequest()

print(request.available_collections("AUX_OBSH", details=False))

print(request.available_collections("AUX_OBSM", details=False))

print(request.available_collections("AUX_OBSS", details=False))

{'AUX_OBSH': ['SW_OPER_AUX_OBSH2_']}

{'AUX_OBSM': ['SW_OPER_AUX_OBSM2_']}

{'AUX_OBSS': ['SW_OPER_AUX_OBSS2_']}

Within each collection, the following variables are available:

print(request.available_measurements("SW_OPER_AUX_OBSH2_"))

print(request.available_measurements("SW_OPER_AUX_OBSM2_"))

print(request.available_measurements("SW_OPER_AUX_OBSS2_"))

['B_NEC', 'F', 'IAGA_code', 'Quality', 'ObsIndex']

['B_NEC', 'F', 'IAGA_code', 'Quality']

['B_NEC', 'F', 'IAGA_code', 'Quality']

B_NEC and F are the magnetic field vector and intensity

IAGA_code gives the official three-letter IAGA code that identifies each observatory

Quality is either "D" or "Q", indicating whether the data are definitive (D) or quasi-definitive (Q)

ObsIndex is an increasing integer (0, 1, 2, ...) attached to the hourly data only; a change in its value indicates a change at the observatory (e.g. of its precise location) while the three-letter IAGA code remained the same
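Once loaded as a pandas DataFrame, the Quality and ObsIndex columns can be used to split or filter the records. A minimal sketch on toy values: the code "OBS", the timestamps and the F values are hypothetical, not real observatory data; only the column names mimic the hourly collection.

```python
import pandas as pd

# Toy hourly records (hypothetical values); columns mimic SW_OPER_AUX_OBSH2_ as a DataFrame
df_hourly = pd.DataFrame(
    {
        "IAGA_code": ["OBS", "OBS", "OBS", "OBS"],
        "ObsIndex": [0, 0, 1, 1],
        "Quality": ["D", "D", "D", "Q"],
        "F": [1.0, 2.0, 3.0, 4.0],
    },
    index=pd.DatetimeIndex(
        ["1990-01-01T00:30", "1990-01-01T01:30", "1995-01-01T00:30", "1995-01-01T01:30"],
        name="Timestamp",
    ),
)

# Keep only definitive (D) records, dropping quasi-definitive (Q) ones
definitive = df_hourly[df_hourly["Quality"] == "D"]

# Treat each (IAGA_code, ObsIndex) pair as its own time series, so that a change at the
# observatory (e.g. a move) is not stitched into one continuous record
segments = dict(list(df_hourly.groupby(["IAGA_code", "ObsIndex"])))
for (code, obs_index), segment in segments.items():
    print(code, obs_index, segment.index.min(), segment.index.max(), len(segment))
```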

Data can be requested similarly to Swarm MAG products#

Note that the IAGA_code variable is needed to distinguish the records from each observatory.

Note that a special message about the data usage terms is issued when the data are requested.

Let’s fetch all the variables available in the 1-minute data for a two-day period:

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSM2_")

request.set_products(["IAGA_code", "B_NEC", "F", "Quality"])

data = request.get_between("2016-01-01", "2016-01-03")

ds = data.as_xarray()

ds

Accessing INTERMAGNET and/or WDC data

Check usage terms at ftp://ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/minute/README

<xarray.Dataset>

Dimensions: (Timestamp: 295200, NEC: 3)

Coordinates:

* Timestamp (Timestamp) datetime64[ns] 2016-01-01 ... 2016-01-02T23:59:00

* NEC (NEC) <U1 'N' 'E' 'C'

Data variables:

IAGA_code (Timestamp) <U3 'ABG' 'ABK' 'API' 'ARS' ... 'VSS' 'WIC' 'WNG'

Longitude (Timestamp) float64 72.87 18.82 171.8 58.6 ... 316.4 15.86 9.073

Quality (Timestamp) <U1 'D' 'D' 'D' 'Q' 'D' 'D' ... 'D' 'D' 'Q' 'D' 'D'

Latitude (Timestamp) float64 18.5 68.23 -13.73 ... -22.26 47.74 53.56

B_NEC (Timestamp, NEC) float64 3.804e+04 197.1 ... 764.8 4.623e+04

Radius (Timestamp) float64 6.376e+06 6.36e+06 ... 6.367e+06 6.364e+06

F (Timestamp) float64 4.297e+04 5.33e+04 ... 4.85e+04 4.962e+04

Attributes:

Sources: ['SW_OPER_AUX_OBSM2__20160101T000000_20160101T235959_010...

MagneticModels: []

AppliedFilters: []

Above, we loaded the data as an xarray Dataset, but we could also load it as a pandas DataFrame. Note that we should use expand=True to separate the vector components of B_NEC into distinct columns:

df = data.as_dataframe(expand=True)

df

| Timestamp | IAGA_code | Longitude | Quality | Latitude | Radius | F | B_NEC_N | B_NEC_E | B_NEC_C |
|---|---|---|---|---|---|---|---|---|---|
| 2016-01-01 00:00:00 | ABG | 72.870 | D | 18.503863 | 6.375973e+06 | 42974.178420 | 38043.065649 | 197.10 | 19986.653510 |
| 2016-01-01 00:00:00 | ABK | 18.823 | D | 68.225754 | 6.360062e+06 | 53297.873523 | 10737.698005 | 1715.90 | 52176.822927 |
| 2016-01-01 00:00:00 | API | 171.781 | D | -13.726025 | 6.376929e+06 | 38804.092366 | 32474.849888 | 6910.60 | -20084.454605 |
| 2016-01-01 00:00:00 | ARS | 58.600 | Q | 59.231110 | 6.362618e+06 | 56202.047989 | 15439.829840 | 3848.14 | 53902.445874 |
| 2016-01-01 00:00:00 | ASC | 345.624 | D | -7.896461 | 6.377908e+06 | 28516.997217 | 19779.633516 | -5398.80 | -19820.145984 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 2016-01-02 23:59:00 | VIC | 236.583 | D | 48.325950 | 6.366376e+06 | 53761.882434 | 17905.402463 | 5324.30 | 50412.184986 |
| 2016-01-02 23:59:00 | VNA | 351.718 | D | -70.562545 | 6.359150e+06 | 38373.585718 | 18053.865463 | -4436.60 | -33569.429593 |
| 2016-01-02 23:59:00 | VSS | 316.350 | Q | -22.264742 | 6.375511e+06 | 23289.026042 | 16765.212732 | -6976.26 | -14582.118244 |
| 2016-01-02 23:59:00 | WIC | 15.862 | D | 47.736545 | 6.367486e+06 | 48503.461985 | 20844.849807 | 1427.50 | 43772.597647 |
| 2016-01-02 23:59:00 | WNG | 9.073 | D | 53.559282 | 6.364323e+06 | 49624.347736 | 18016.450576 | 764.80 | 46232.007071 |

295200 rows × 9 columns
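Because IAGA_code and Quality are ordinary columns, records can also be split by observatory or by quality flag on the client side. A minimal pandas sketch, using only the first five rows of the table above (trimmed to three columns) in place of the full `df`:

```python
import pandas as pd

# First five rows of the 1-minute table above, trimmed to three columns
df_sample = pd.DataFrame(
    {
        "IAGA_code": ["ABG", "ABK", "API", "ARS", "ASC"],
        "Quality": ["D", "D", "D", "Q", "D"],
        "F": [42974.178420, 53297.873523, 38804.092366, 56202.047989, 28516.997217],
    },
    index=pd.DatetimeIndex(["2016-01-01 00:00:00"] * 5, name="Timestamp"),
)

# Records from a single observatory
df_abk = df_sample[df_sample["IAGA_code"] == "ABK"]

# Definitive records only
df_definitive = df_sample[df_sample["Quality"] == "D"]

# Number of records per observatory (on the full df: up to 2880 per site for two days of minutes)
counts = df_sample.groupby("IAGA_code").size()
```

On the full `df`, the same `groupby("IAGA_code")` yields one sub-frame per observatory. The `:<IAGA_code>` collection subset in the next sections does the single-observatory selection on the server instead, which avoids downloading the other sites.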

Use available_observatories to find possible IAGA codes#

We can get a dataframe containing the availability times of data from all the available observatories for a given collection:

request.available_observatories("SW_OPER_AUX_OBSM2_", details=True)

Accessing INTERMAGNET and/or WDC data

Check usage terms at ftp://ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/minute/README

| | site | startTime | endTime | Latitude | Longitude | Radius |
|---|---|---|---|---|---|---|
| 0 | AAA | 2005-01-01T00:00:00Z | 2015-12-31T23:59:00Z | 43.058011 | 76.920 | 6.369442e+06 |
| 1 | AAE | 1998-01-01T00:00:00Z | 2013-12-31T23:59:00Z | 8.975526 | 38.766 | 6.380055e+06 |
| 2 | ABG | 1997-01-02T00:00:00Z | 2023-12-31T23:59:00Z | 18.503863 | 72.870 | 6.375980e+06 |
| 3 | ABK | 1997-01-02T00:00:00Z | 2026-01-20T23:59:00Z | 68.267960 | 18.800 | 6.360051e+06 |
| 4 | AIA | 2004-01-01T00:00:00Z | 2023-12-31T23:59:00Z | -65.103358 | 295.730 | 6.360536e+06 |
| ... | ... | ... | ... | ... | ... | ... |
| 146 | VSS | 1999-01-01T00:00:00Z | 2025-12-31T23:59:00Z | -22.264742 | 316.350 | 6.375511e+06 |
| 147 | WIC | 2015-01-01T00:00:00Z | 2026-01-20T23:59:00Z | 47.736545 | 15.866 | 6.367486e+06 |
| 148 | WNG | 1997-01-02T00:00:00Z | 2026-01-20T23:59:00Z | 53.541246 | 9.053 | 6.364345e+06 |
| 149 | YAK | 2009-01-01T00:00:00Z | 2018-12-31T23:59:00Z | 61.800024 | 129.660 | 6.361609e+06 |
| 150 | YKC | 1997-01-02T00:00:00Z | 2023-12-31T23:59:00Z | 62.324005 | 245.518 | 6.361545e+06 |

151 rows × 6 columns

We can also get a list of only the available observatories during a given time window:

print(request.available_observatories("SW_OPER_AUX_OBSM2_", '2016-01-01', '2016-01-02'))

Accessing INTERMAGNET and/or WDC data

Check usage terms at ftp://ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/minute/README

['ABG', 'ABK', 'API', 'ARS', 'ASC', 'ASP', 'BDV', 'BEL', 'BFO', 'BLC', 'BMT', 'BOU', 'BOX', 'BRD', 'BRW', 'BSL', 'CBB', 'CKI', 'CLF', 'CMO', 'CNB', 'CSY', 'CTA', 'CYG', 'DED', 'DLT', 'DMC', 'DUR', 'EBR', 'ESK', 'EYR', 'FCC', 'FRD', 'FRN', 'FUR', 'GAN', 'GCK', 'GDH', 'GNG', 'GUA', 'GUI', 'HAD', 'HBK', 'HER', 'HLP', 'HON', 'HRB', 'HRN', 'HUA', 'HYB', 'IPM', 'IRT', 'IZN', 'JCO', 'KAK', 'KDU', 'KEP', 'KHB', 'KNY', 'KOU', 'LER', 'LON', 'LRM', 'LYC', 'LZH', 'MAB', 'MAW', 'MBO', 'MCQ', 'MGD', 'MMB', 'NAQ', 'NEW', 'NGK', 'NVS', 'OTT', 'PAG', 'PET', 'PST', 'RES', 'SBA', 'SBL', 'SFS', 'SHU', 'SIT', 'SJG', 'SOD', 'SPG', 'SPT', 'STJ', 'SUA', 'TAM', 'TDC', 'THL', 'THY', 'TSU', 'TUC', 'UPS', 'VIC', 'VNA', 'VSS', 'WIC', 'WNG']
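A rough client-side analogue of this query is to filter the `details=True` table by its startTime and endTime columns. The sketch below uses only the first five rows of that table. These columns appear to give only the first and last record times, so a site can pass the filter and still have no data in the window: AIA passes here but is absent from the server-side list above, presumably because of a gap in its record. The server-side query is therefore the more reliable check.

```python
import pandas as pd

# First five rows of the availability table above (position columns dropped)
availability = pd.DataFrame(
    {
        "site": ["AAA", "AAE", "ABG", "ABK", "AIA"],
        "startTime": ["2005-01-01T00:00:00Z", "1998-01-01T00:00:00Z", "1997-01-02T00:00:00Z",
                      "1997-01-02T00:00:00Z", "2004-01-01T00:00:00Z"],
        "endTime": ["2015-12-31T23:59:00Z", "2013-12-31T23:59:00Z", "2023-12-31T23:59:00Z",
                    "2026-01-20T23:59:00Z", "2023-12-31T23:59:00Z"],
    }
)
# The trailing "Z" makes these timezone-aware (UTC) timestamps
availability["startTime"] = pd.to_datetime(availability["startTime"])
availability["endTime"] = pd.to_datetime(availability["endTime"])

# Window of interest, also timezone-aware so that the comparisons are valid
start = pd.Timestamp("2016-01-01", tz="UTC")
end = pd.Timestamp("2016-01-02", tz="UTC")

# Sites whose overall availability period overlaps the window
overlaps = (availability["startTime"] < end) & (availability["endTime"] > start)
sites = availability.loc[overlaps, "site"].tolist()
print(sites)
```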

Use IAGA_code to specify a particular observatory#

Subset the collection with a special collection name like "SW_OPER_AUX_OBSM2_:<IAGA_code>" to get data from only that observatory:

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSM2_:ABK")

request.set_products(["IAGA_code", "B_NEC", "F", "Quality"])

data = request.get_between("2016-01-01", "2016-01-03")

ds = data.as_xarray()

ds

Accessing INTERMAGNET and/or WDC data

Check usage terms at ftp://ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/minute/README

<xarray.Dataset>

Dimensions: (Timestamp: 2880, NEC: 3)

Coordinates:

* Timestamp (Timestamp) datetime64[ns] 2016-01-01 ... 2016-01-02T23:59:00

* NEC (NEC) <U1 'N' 'E' 'C'

Data variables:

IAGA_code (Timestamp) <U3 'ABK' 'ABK' 'ABK' 'ABK' ... 'ABK' 'ABK' 'ABK'

Longitude (Timestamp) float64 18.82 18.82 18.82 18.82 ... 18.82 18.82 18.82

Quality (Timestamp) <U1 'D' 'D' 'D' 'D' 'D' 'D' ... 'D' 'D' 'D' 'D' 'D'

Latitude (Timestamp) float64 68.23 68.23 68.23 68.23 ... 68.23 68.23 68.23

B_NEC (Timestamp, NEC) float64 1.074e+04 1.716e+03 ... 5.198e+04

Radius (Timestamp) float64 6.36e+06 6.36e+06 ... 6.36e+06 6.36e+06

F (Timestamp) float64 5.33e+04 5.331e+04 ... 5.32e+04 5.32e+04

Attributes:

Sources: ['SW_OPER_AUX_OBSM2__20160101T000000_20160101T235959_010...

MagneticModels: []

AppliedFilters: []

Magnetic models can be evaluated at the same time#

The VirES API treats these data similarly to the Swarm MAG products, and so all the same model handling behaviour applies. For example, we can directly remove the CHAOS core and crustal model predictions:

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSM2_:ABK")

request.set_products(

    measurements=["B_NEC"],

    models=["'CHAOS-internal' = 'CHAOS-Core' + 'CHAOS-Static'"],

    residuals=True,

)

data = request.get_between("2016-01-01", "2016-01-03")

ds = data.as_xarray()

Accessing INTERMAGNET and/or WDC data

Check usage terms at ftp://ftp.nerc-murchison.ac.uk/geomag/Swarm/AUX_OBS/minute/README

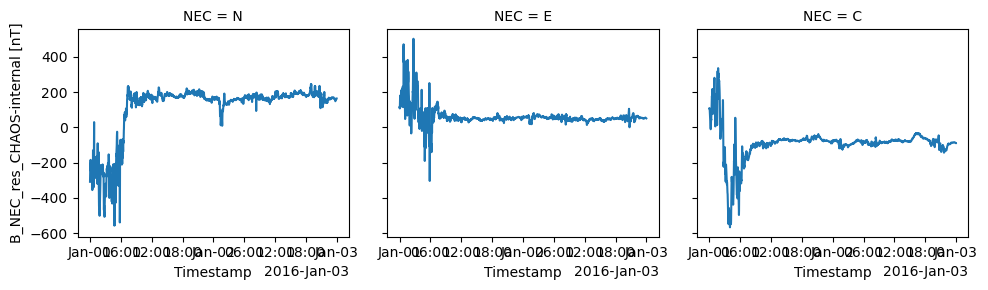

ds["B_NEC_res_CHAOS-internal"].plot.line(x="Timestamp", col="NEC")

<xarray.plot.facetgrid.FacetGrid at 0x7fd69ee0f5d0>

(This roughly shows the disturbance sensed by the observatory due to magnetospheric and ionospheric sources, since the modelled core and crustal fields have been removed.)
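VirES evaluates the model and forms the residual on the server. The arithmetic itself is a plain subtraction: the composed model is the sum of its parts, and the residual is the measurement minus that sum. A minimal local sketch with xarray, on toy arrays (hypothetical values, not CHAOS output) laid out like the (Timestamp, NEC) output:

```python
import numpy as np
import xarray as xr

# Toy arrays (hypothetical values) with the same (Timestamp, NEC) layout as B_NEC
timestamps = np.array(["2016-01-01T00:00", "2016-01-01T00:01"], dtype="datetime64[ns]")
coords = {"Timestamp": timestamps, "NEC": ["N", "E", "C"]}
dims = ("Timestamp", "NEC")
b_measured = xr.DataArray([[100.0, 20.0, 300.0], [101.0, 21.0, 299.0]], coords=coords, dims=dims)
b_core = xr.DataArray([[90.0, 15.0, 290.0], [90.0, 15.0, 290.0]], coords=coords, dims=dims)
b_static = xr.DataArray([[5.0, 2.0, 5.0], [5.0, 2.0, 5.0]], coords=coords, dims=dims)

# Composed model ('CHAOS-internal' = 'CHAOS-Core' + 'CHAOS-Static'), then the residual
b_model = b_core + b_static
b_res = b_measured - b_model
print(b_res.sel(NEC="N").values)
```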

Data visualisation#

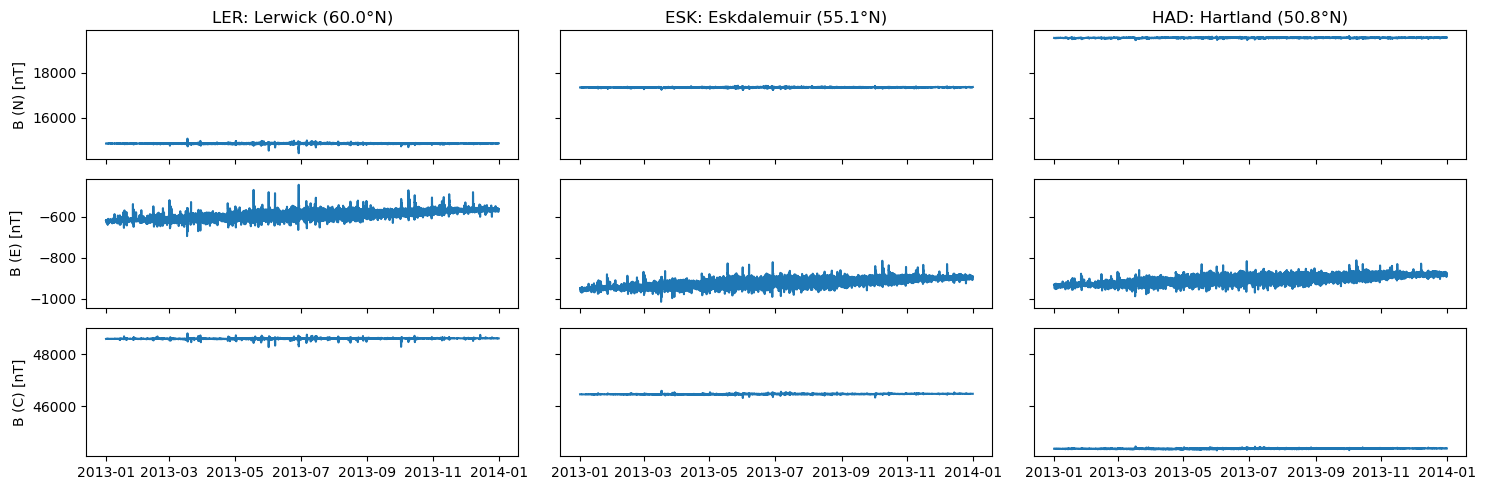

Three observatories over a year#

Let’s run through a visualisation of one year of hourly means from three observatories.

First fetch the data from our chosen observatories across the UK: LER (Lerwick), ESK (Eskdalemuir), HAD (Hartland). We can apply a few optional settings to reduce unnecessary output:

verbose=False (passed to set_collection) disables the data terms message

asynchronous=False (passed to get_between) enables synchronous processing on the server; this is slightly faster but only works for smaller data requests

show_progress=False (passed to get_between) hides the progress bars

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSH2_:LER", verbose=False)

request.set_products(measurements=["B_NEC"])

data = request.get_between("2013-01-01", "2014-01-01", asynchronous=False, show_progress=False)

ds_ler = data.as_xarray()

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSH2_:ESK", verbose=False)

request.set_products(measurements=["B_NEC"])

data = request.get_between("2013-01-01", "2014-01-01", asynchronous=False, show_progress=False)

ds_esk = data.as_xarray()

request = SwarmRequest()

request.set_collection("SW_OPER_AUX_OBSH2_:HAD", verbose=False)

request.set_products(measurements=["B_NEC"])

data = request.get_between("2013-01-01", "2014-01-01", asynchronous=False, show_progress=False)

ds_had = data.as_xarray()

Our data are now in three xarray Datasets, which look like this:

ds_ler

<xarray.Dataset>

Dimensions: (Timestamp: 8760, NEC: 3)

Coordinates:

* Timestamp (Timestamp) datetime64[ns] 2013-01-01T00:30:00 ... 2013-12-31T...

* NEC (NEC) <U1 'N' 'E' 'C'

Data variables:

Longitude (Timestamp) float64 358.8 358.8 358.8 358.8 ... 358.8 358.8 358.8

B_NEC (Timestamp, NEC) float64 1.487e+04 -616.8 ... -561.9 4.862e+04

Radius (Timestamp) float64 6.362e+06 6.362e+06 ... 6.362e+06 6.362e+06

Latitude (Timestamp) float64 59.97 59.97 59.97 59.97 ... 59.97 59.97 59.97

Attributes:

Sources: ['SW_OPER_AUX_OBS_2__20130101T000000_20131231T235959_0148']

MagneticModels: []

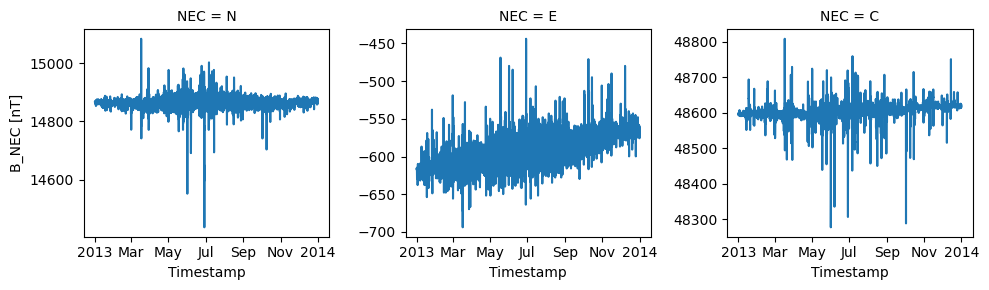

AppliedFilters: []

We can quickly preview the data using the xarray plotting tools:

ds_ler["B_NEC"].plot.line(x="Timestamp", col="NEC", sharey=False)

<xarray.plot.facetgrid.FacetGrid at 0x7fd677920110>

Let’s make a more complex figure to display data from all three observatories together. We can use matplotlib directly now to create the figure and pass the xarray objects to it to fill the contents. Note that we slice out a particular vector component with e.g. ds_ler["B_NEC"].sel(NEC="N").

fig, axes = plt.subplots(nrows=3, ncols=3, figsize=(15, 5), sharex="all", sharey="row")

for i, NEC in enumerate("NEC"):

    # One row per vector component (N, E, C), one column per observatory

    axes[i, 0].plot(ds_ler["Timestamp"], ds_ler["B_NEC"].sel(NEC=NEC))

    axes[i, 1].plot(ds_esk["Timestamp"], ds_esk["B_NEC"].sel(NEC=NEC))

    axes[i, 2].plot(ds_had["Timestamp"], ds_had["B_NEC"].sel(NEC=NEC))

    axes[i, 0].set_ylabel(f"B ({NEC}) [nT]")

axes[0, 0].set_title("LER: Lerwick (60.0°N)")

axes[0, 1].set_title("ESK: Eskdalemuir (55.1°N)")

axes[0, 2].set_title("HAD: Hartland (50.8°N)")

fig.tight_layout()

This shows the difference in the main field between these locations: further north (at Lerwick), the field points more steeply downwards, so the vertical component (C) is stronger. We can also see a small secular variation over the year as the field changes.
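The steepness of the field can be quantified by the inclination, the angle of the field below the horizontal: I = arctan(C / H), with horizontal intensity H = sqrt(N² + E²). A minimal sketch using the first-minute B_NEC values of ABG (18.5°N) and ABK (68.2°N), copied from the 1-minute table earlier in this notebook because they span a wide latitude range:

```python
import numpy as np
import xarray as xr

# First-minute B_NEC values [nT] for ABG and ABK, copied from the 1-minute table
b_nec = xr.DataArray(
    [[38043.065649, 197.10, 19986.653510], [10737.698005, 1715.90, 52176.822927]],
    coords={"IAGA_code": ["ABG", "ABK"], "NEC": ["N", "E", "C"]},
    dims=("IAGA_code", "NEC"),
)

# Slice out each component; drop=True removes the leftover scalar NEC coordinate
n, e, c = (b_nec.sel(NEC=k, drop=True) for k in "NEC")

h = np.hypot(n, e)  # horizontal intensity
f = np.hypot(h, c)  # total intensity

# Inclination in degrees (positive = field pointing downwards)
inclination_deg = np.degrees(np.arctan2(c, h))
print(inclination_deg.values)
```

This gives roughly 28° at ABG and 78° at ABK, and the computed intensities agree closely with the F column of the table.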